In this blog post, I will explain how to implement "Large Scale Near Line Search Indexing" using HBase and SOLR. This scenario applies to use cases where large volumes of ongoing content updates need to be pushed into live search indexes and the content should be searchable almost instantly (in near real time). In addition, the content that needs to be stored and indexed might be unstructured, or large enough that existing RDBMS solutions would not scale.

In such a situation, the master content could reside in a distributed, column-oriented NoSQL key-value store like HBase, which provides horizontal scalability. For example, let's say that you have a high-throughput website which gets a lot of new user-generated content that you want to make searchable. In these cases, you need a store that can support massive scale and also allows for flexible schema evolution. HBase lends itself well to these kinds of use cases, as it offers a distributed solution with extreme scalability and high reliability.

In this implementation, we will use Lily HBase Indexer to transform incoming HBase mutations to SOLR documents and update live SOLR indexes.

About Lily HBase Indexer

The Lily HBase Indexer Service is a fault-tolerant system for processing a continuous stream of HBase cell updates into live search indexes. It works by acting as an HBase replication sink. As updates are written to HBase region servers, they are also written to the HLog (WAL). HBase replication continuously polls the HLog files for the latest changes, which are "replicated" asynchronously to the HBase Indexer processes. The indexer then analyzes the incoming HBase cell updates, creates Solr documents, and pushes them to SolrCloud servers.

The configuration information about indexers is stored in ZooKeeper, so new indexer hosts can be added to a cluster at any time, which enables horizontal scalability.

Installing and Configuring Lily HBase Indexer

Installing the Lily HBase Indexer in your cluster is very easy. If you are using a distribution like Cloudera (CDH), the Lily HBase Indexer is already included in the CDH parcels. If not, the package can be downloaded, built and installed from the Lily HBase Indexer website. Please check here for the installation steps if you want to do it manually.

Configuring HBase Indexer

The Lily HBase Indexer service provides a command-line utility that can be used to add, list, update and delete indexer configurations. The command shown below registers a new indexer configuration with the HBase Indexer. This is done by passing an indexer configuration XML file along with the ZooKeeper ensemble information used for HBase and SOLR, and the Solr collection.

hbase-indexer add-indexer \
  --name search_indexer \
  --indexer-conf /.search-indexer.xml \
  --connection-param solr.zk=ZK_HOST/solr \
  --connection-param solr.collection=search_meta \
  --zookeeper ZK_HOST:2181

The XML configuration file specifies the HBase table that needs to be replicated and a mapper. Here we use the Morphline framework to transform the columns in the HBase table into SOLR fields, and we pass the morphline file that contains the pipeline of commands for the transformation.

<indexer table="search_meta" mapper="com.ngdata.hbaseindexer.morphline.MorphlineResultToSolrMapper" mapping-type="row" unique-key-field="id" row-field="keyword">
  <param name="morphlineFile" value="morphlines.conf"/>
</indexer>

The param named "morphlineFile" specifies the location of the Morphlines configuration file. The location can be an absolute path to your Morphlines file.

Enabling HBase Replication

Since the HBase Indexer works by acting as a Replication Sink, we need to make sure that Replication is enabled in HBase. You can activate replication using Cloudera Manager by clicking HBase Service->Configuration->Backup and ensuring “Enable HBase Replication” and “Enable Indexing” are both checked.

In addition, the column family in the HBase table that needs to be replicated must have replication enabled. This can be done by setting the REPLICATION_SCOPE flag when the column family is created, as shown below:

create 'searchTbl', {NAME => 'meta', REPLICATION_SCOPE => 1}

About Morphlines

Morphlines provides a simple ETL framework for Hadoop applications. A morphline is a configuration file that makes it easy to define a transformation chain that consumes any kind of data from any kind of data source, processes the data and loads the results into a Hadoop component. Morphlines can be seen as an evolution of Unix pipelines, where the data model works with streams of generic records and the transformation commands are defined in a config file. Since Morphlines is a Java library, it can also be embedded in any Java codebase. Here, we use it to transform the HBase cell updates and map them to fields in SOLR.

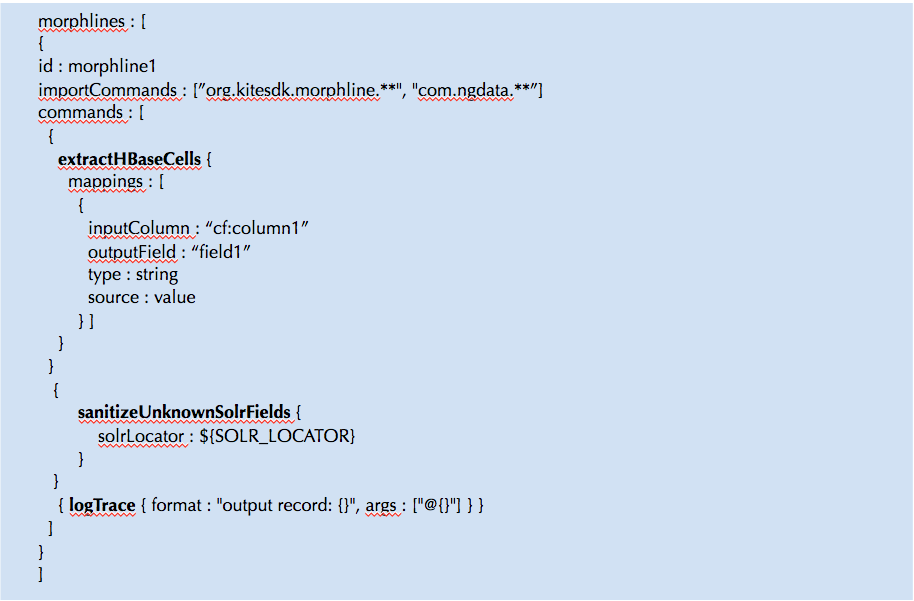

Using Morphlines in HBase Indexer

Here I describe a very basic morphline file whose first command is extractHBaseCells, a morphline command that extracts cells from an HBase Result and transforms the values into a SolrInputDocument. The command consists of an array of zero or more mapping specifications. The parameters for each mapping are:

- inputColumn – specifies the columns to subscribe to

- outputField – the name of the field where the data is sent

- type – the type of the field

- source – set to value to specify that the cell value should be indexed

The second command, sanitizeUnknownSolrFields, prevents fields that are not defined in the Solr schema from being written to SOLR. The mapper that we used, MorphlineResultToSolrMapper, has the implementation that writes the morphline fields into SOLR documents.
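A minimal sketch of a morphline file with these two commands might look like the following. The column `meta:keyword` and the output field `keyword` are assumptions chosen for illustration, and the `SOLR_LOCATOR` values reuse the collection name and the ZooKeeper placeholder from the add-indexer command above; adjust them to your own table and cluster.

```hocon
# Where sanitizeUnknownSolrFields looks up the Solr schema (placeholder values).
SOLR_LOCATOR : {
  collection : search_meta
  zkHost : "ZK_HOST:2181/solr"
}

morphlines : [
  {
    id : morphline1
    importCommands : ["org.kitesdk.**", "com.ngdata.**"]

    commands : [
      {
        # Map HBase cells to fields of the Solr document.
        extractHBaseCells {
          mappings : [
            {
              inputColumn : "meta:keyword"   # hypothetical family:qualifier
              outputField : "keyword"        # hypothetical Solr field
              type : string
              source : value                 # index the cell value
            }
          ]
        }
      }

      # Drop any record fields that the Solr schema does not define.
      { sanitizeUnknownSolrFields { solrLocator : ${SOLR_LOCATOR} } }
    ]
  }
]
```

Each additional column you want to index becomes one more entry in the `mappings` array.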

Morphline support for Avro

Morphlines comes with some handy utilities for reading and writing Avro-formatted objects. These can be combined with the extractHBaseCells command to transform a Kite Avro-formatted dataset persisted in HBase as byte arrays. The readAvroContainer command parses an Apache Avro binary container and emits a morphline record for each contained Avro datum.

The Avro schema that was used to write the Avro data is retrieved from the Avro container. The byte array is read from the first attachment of the input record. We can then use the extractAvroPaths command to extract specific values from an Avro object as shown in the example below:
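A rough sketch of such a pipeline is given here under a few assumptions: the column `meta:payload` and the Avro fields `id` and `title` are hypothetical names, and each cell is assumed to hold a complete Avro container. The raw cell bytes are copied unconverted into `_attachment_body`, the attachment field that readAvroContainer reads its input from.

```hocon
morphlines : [
  {
    id : morphline1
    importCommands : ["org.kitesdk.**", "com.ngdata.**"]

    commands : [
      {
        # Pass the raw cell bytes through as the record's first attachment.
        extractHBaseCells {
          mappings : [
            {
              inputColumn : "meta:payload"      # hypothetical family:qualifier
              outputField : "_attachment_body"
              type : "byte[]"                   # no conversion, keep raw bytes
              source : value
            }
          ]
        }
      }

      # Parse the Avro container; emits one record per Avro datum.
      { readAvroContainer {} }

      {
        # Copy selected Avro values into record fields named after Solr fields.
        extractAvroPaths {
          flatten : true
          paths : {
            id : /id          # hypothetical Avro field
            title : /title    # hypothetical Avro field
          }
        }
      }
    ]
  }
]
```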

The above snippet shows how you can transform Avro-formatted data written to HBase and map it to SOLR fields.

Setting up SOLR schema

In order to send the HBase mutations to fields in SOLR documents, we have to make sure the following setup is done:

- Set up the collection in SolrCloud or Cloudera Search

- Set up the field names in schema.xml

Once the Solr collections are created, the HBase Indexer process can be started, and you can see the HBase mutations being replicated and the live Solr indexes being updated (in near real time).

Hi Thanigai- I am trying out the hbase solr integration using the hbase indexer in ibm biginsights. The problem i face is that i dont see the require jars provided in the hbase indexer git hub site. I can see the CDH has all the required jars for hbase indexer within the package. Can you please help me in getting the jars. I get error like class not found AddIndexerCli
